Introduction

First of all what is weighted random? Let’s say you have a list of items and you want to pick one of them randomly. Doing this seems easy as all that’s required is to write a litte function that generates a random index referring to the one of the items in the list. But sometimes plain randomness is not enough, we want random results that are biased or based on some probability. This is where the weighted random generation algorithm needed.

Scenarios

There are lots of real world scenarios that need weighted random. Such as load balancers(like nginx, haproxy etc). Following is an example configuration of nginx. In the example, backend1 has weight 5; the other two servers have the default weight 1, With this configuration of weights, out of every seven requests, five are sent to backend1 and one to backend2 one to backend3.

http {

upstream backend {

server backend1 weight=5;

server backend2;

server backend3;

}

}

Another example is crawler scheduling. When I was developing a concurrent crawling framework last year, I need to schedule the crawling tasks according to the task importenceness. The tasks importenceness are expressed by float value weights that are mannually assigned to each site that tasks belong to. So there should be a WeightedChoice function on the scheduler of the crawler system that determines which task should be scheduled the next time.

In the negative sampling part of the famous word2vec, the algorithm needs to randomly sample some negative words according to their frequencies in the corpus. Codes link.

There are more examples in game developing: In games we often encounter random dropping of specified items by certain drop probability, such as falling silver coins 25%, gold coins 20%, diamonds 10%, equipment 5%, accessories 40%. The next dropped item type is now required to meet the above probability.

Solutions

Solution 1

The first method came up to me is to extend the uniform distributed random number generator. Let’s begin with an example(all example programmes here after will be writen in Golang):

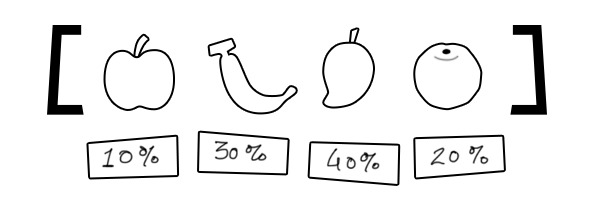

var items = []int{0, 1, 2, 3}

var weights = []float32{0.1, 0.3, 0.4, 0.2}

Making them conform to the weights what we’d do is something simple. Basically repeat the items 10x or even 100x times based on the numbers we have. So let’s say we’re repeating 10x times, this is the list we’ll end up with:

var choices = []int{0, 1, 1, 1, 2, 2, 2, 2, 3, 3}

Then we can randomly choose a value from choices, and we are done. Full codes bellow:

package main

import (

"fmt"

"math/rand"

)

func WeightedRandomS1(weights []float32) int {

if len(weights) == 0 {

return 0

}

var choices []int

for i, w := range weights {

wi := int(w * 10)

for j := 0; j < wi; j++ {

choices = append(choices, i)

}

}

return choices[rand.Int()%len(choices)]

}

func main() {

for i := 0; i < 100; i++ {

fmt.Println(WeightedRandom([]float32{0.1, 0.3, 0.6}))

}

}

In word2vec, this solution is adopted.

Solution 2

The first solution takes too much memory, then came solution 2: Compute the discrete cumulative density function (CDF) of the list – or in simple terms the array of cumulative sums of the weights. Then generate a random number in the range between 0 and the sum of all weights, do a linear search to find this random number in your discrete CDF array and get the value corresponding to this entry – this is the weighted random number.

func WeightedRandomS2(weights []float32) int {

if len(weights) == 0 {

return 0

}

var sum float32 = 0.0

for _, w := range weights {

sum += w

}

r := rand.Float32() * sum

for i, w := range weights {

r -= w

if r < 0 {

return i

}

}

return len(weights) - 1

}

I used this solution in the scheduler of crawling framework.

Solution 3

Adopting binary search over the CDF array could reduce the time complexity from to .

func WeightedRandomS3(weights []float32) int {

n := len(weights)

if n == 0 {

return 0

}

cdf := make([]float32, n)

var sum float32 = 0.0

for i, w := range weights {

if i > 0 {

cdf[i] = cdf[i-1] + w

} else {

cdf[i] = w

}

sum += w

}

r := rand.Float32() * sum

var l, h int = 0, n - 1

for l <= h {

m := l + (h-l)/2

if r <= cdf[m] {

if m == 0 || (m > 0 && r > cdf[m-1]) {

return m

}

h = m - 1

} else {

l = m + 1

}

}

return -1

}

Solution 4

The optimal solution for weighted random should be the Alias Method. It requires time to initialize, time to make a selection, and memory. A golang version implementation is here.

Algorithm: Vose’s Alias Method

Initialization:

- Create arrays and , each of size .

- Create two worklists, and .

- Multiply each probability by .

- For each scaled probability :

- If , add to .

- Otherwise , add to .

- While and are not empty: ( might be emptied first)

- Remove the first element from ; call it .

- Remove the first element from ; call it .

- Set .

- Set .

- Set . (This is a more numerically stable option)

- If , add to .

- Otherwise , add to .

- While is not empty:

- Remove the first element from ; call it .

- Set .

- While is not empty: This is only possible due to numerical instability.

- Remove the first element from ; call it .

- Set .

Generation:

- Generate a fair die roll from an n-sided die; call the side .

- Flip a biased coin that comes up heads with probability .

- If the coin comes up “heads”, return .

- Otherwise, return .

References

- Walker, A. J. 1977. “An efficient method for generating discrete random variable with general distributions.” ACM Transactions on Mathematical Software 3 253–256.

- “Darts, Dice, and Coins: Sampling from a Discrete Distribution”. Keith Schwarz, December 29, 2011

- Alias method. Wikipedia, April 5, 2018

- Weighted random selection from array , stackoverflow, Dec 16 2010